Opening the black box: The learning journey that led to Ai CSAT Explanations

Head of AI Research

Last month, Dialpad launched Ai CSAT Explanations, one of the most meaningful advances in our Ai platform to date. Here’s the story behind it, and why it matters so much to anyone who wants to build a better customer experience.

Why CSAT matters (and where it falls short)

Let’s say you order something online. It’s supposed to arrive in 24 hours—but three days later, it’s still missing. Frustrated, you call customer service. A chatbot picks up. You tell the bot your sad story. But does the software really know you’re upset?

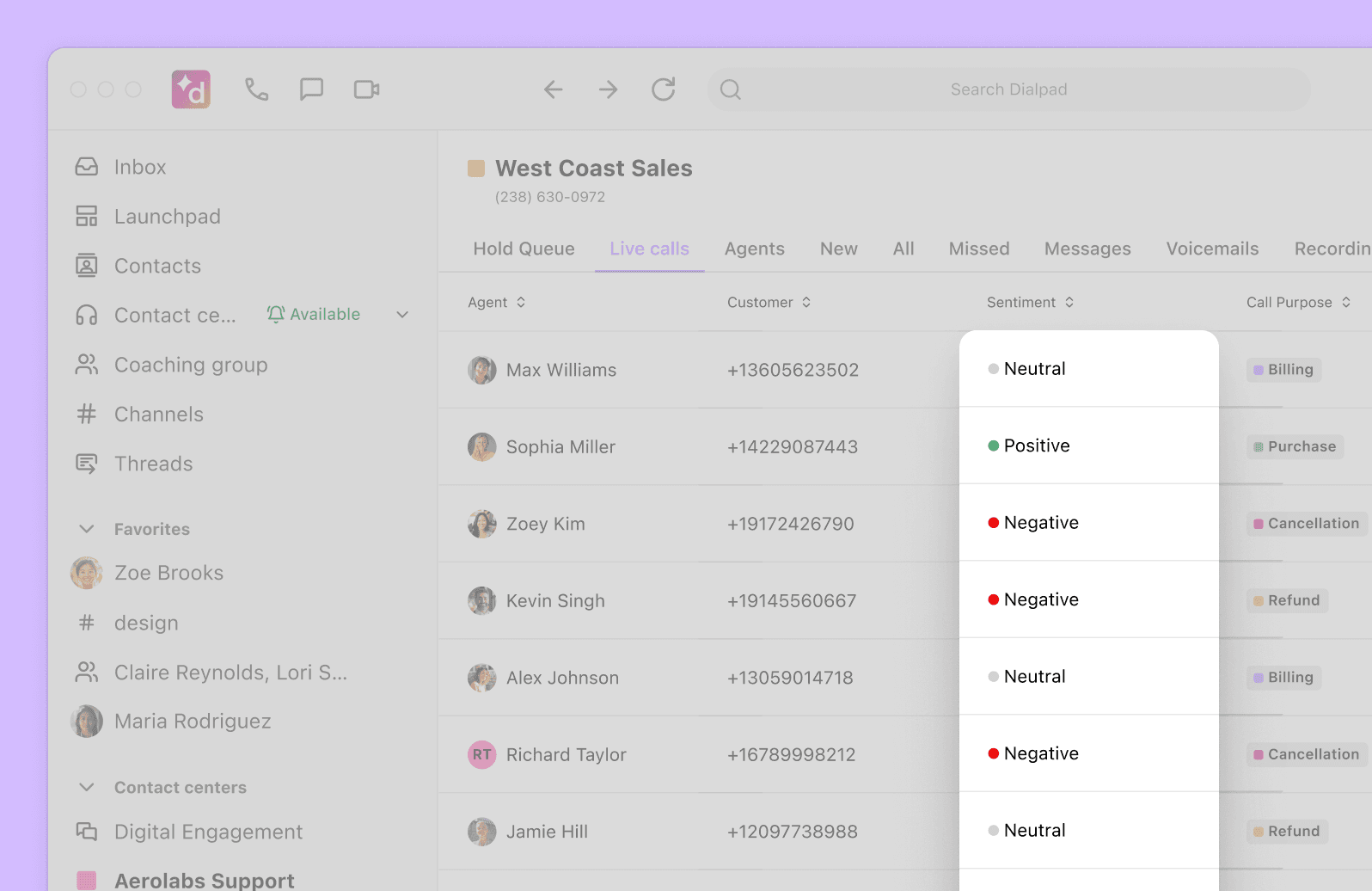

This is a central challenge of Ai development in service-oriented industries, where customer satisfaction (CSAT) scores are a go-to metric for measuring service quality. A busy contact center might receive thousands of calls a day from customers who are happy, neutral, upset, and everything in between. Ai’s ability to understand these emotions (what the industry calls sentiment analysis) is the starting point for providing great customer service at scale.

At Dialpad, we’ve included CSAT scores in our call center platform for years by prompting customers to rate their experience after the call. But only a small percentage of people respond to post-call surveys, and those who do tend to give highly polarized scores: mostly 1s or 5s. This lopsided distribution doesn’t mean most callers loved or hated their call; it means that callers who loved or hated their call are more likely to care enough to answer the survey. Every call that would have earned a 2, 3, or 4 but went unrated because of this response bias is a missed opportunity for agents to follow up with dissatisfied customers, and for managers to coach agents on how to perform better on future calls.

To capture all this knowledge, we had to build Ai CSAT.

The importance of explainability

To make sense of call transcripts, large language models (LLMs) must navigate potholes ranging from imperfect transcriptions to cultural nuances. One of our Ai team’s favorite learning moments came when we realized that one of our models was rating call sentiment in Australian transcripts as alarmingly bad when the calls had actually gone fine. Apparently, some curse words, when spoken Down Under, simply signal excitement rather than anger. Clearly, teaching a model to deliver reliable sentiment analysis on any call transcript, regardless of topic, culture, or language, was no small undertaking.

So Ai CSAT was a real achievement. Launched in June 2022 and trained on the transcripts and ratings of more than a million customer calls, Ai CSAT reads and interprets call transcripts and assigns a 1-to-5 CSAT score to nearly 100 percent of calls, saving customers countless hours and giving agents and managers vast troves of new information.

It was a huge step forward: Ai CSAT could assign a score to any call transcript. But that breakthrough uncovered a new need. While our model could give a call a CSAT score, it couldn’t explain why it chose that particular score. So when an upset (or just curious) customer asked, “Why was this call rated a 1?”, we couldn’t answer. To offer a truly valuable, Ai-powered understanding of customer sentiment, we needed to go one important level deeper.

Opening the black box

The final piece of the sentiment analysis puzzle is the kind of commonsense idea that’s actually a huge undertaking: if our problem is that Ai CSAT is delivering CSAT scores but no reasons for those scores, why not train it to first find the reasons for a call’s quality, and then choose a CSAT score accordingly?

Here’s how we put together the process for our updated CSAT tool. We started by identifying seven core categories that influence customer satisfaction on any given call:

Completeness of resolution—Was the customer’s issue fully resolved?

Agent knowledge—Did the agent fully understand the issue?

Agent empathy—Did the agent show caring and concern?

Agent communication—Was the call itself clear and effective?

Customer sentiment—When the call ended, was the customer happy with the outcome?

Agent speed and efficiency—How quickly was the issue handled?

Operational friction—Did issues outside the agent’s control (like a bad transfer or system outage) impact the experience?

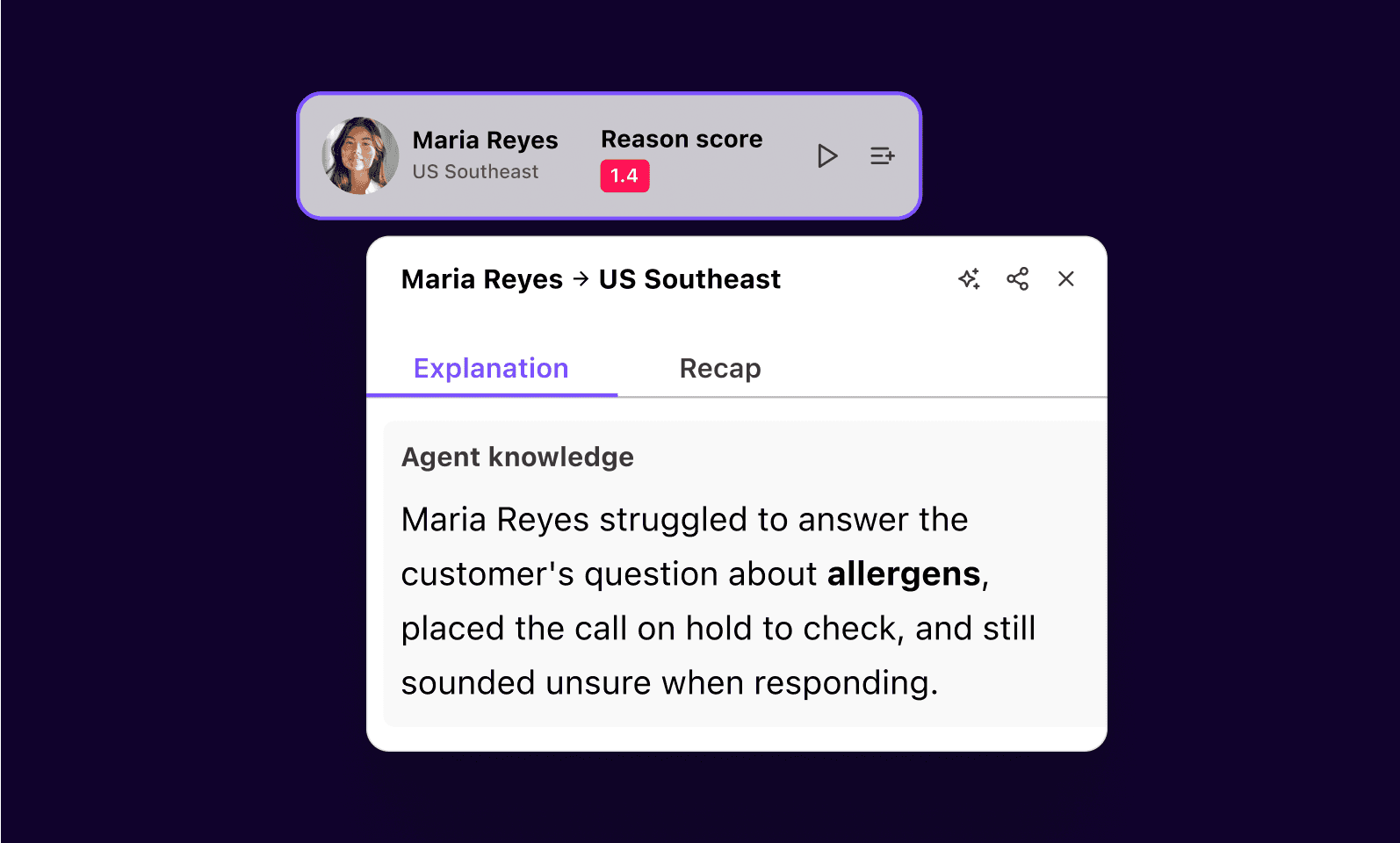

Ai CSAT Explanations first studies a call transcript. Then, for each of these seven categories, it makes a quality judgment, generates a score from 1 to 5, writes a one-paragraph explanation of why it chose that score, and tags the issue behind the low (or high) score with a one- or two-word “sub-category,” such as [billing issue] or [product confusion].
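To make the per-category output concrete, here is a minimal Python sketch of one plausible record shape for a single category judgment. The `Category` enum member names, the `CategoryJudgment` class, and its validation rules are hypothetical illustrations built from the description above, not Dialpad’s actual schema.

```python
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    """The seven CSAT categories; member names are hypothetical."""
    RESOLUTION = "Completeness of resolution"
    KNOWLEDGE = "Agent knowledge"
    EMPATHY = "Agent empathy"
    COMMUNICATION = "Agent communication"
    SENTIMENT = "Customer sentiment"
    SPEED = "Agent speed and efficiency"
    FRICTION = "Operational friction"


@dataclass(frozen=True)
class CategoryJudgment:
    """Hypothetical per-category output for one call."""
    category: Category
    score: int          # 1 (worst) to 5 (best)
    explanation: str    # one-paragraph rationale for the score
    sub_category: str   # one- or two-word tag, e.g. "billing issue"

    def __post_init__(self) -> None:
        # Keep scores on the 1-to-5 CSAT scale.
        if not 1 <= self.score <= 5:
            raise ValueError(f"score must be between 1 and 5, got {self.score}")
        # Enforce the one- or two-word sub-category convention.
        if not 1 <= len(self.sub_category.split()) <= 2:
            raise ValueError(f"sub-category must be one or two words: {self.sub_category!r}")


# Example: one category judgment for a single (made-up) call.
judgment = CategoryJudgment(
    category=Category.RESOLUTION,
    score=2,
    explanation="The agent promised a refund but could not confirm it was issued.",
    sub_category="billing issue",
)
```

A full call would then carry seven such judgments, one per category.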

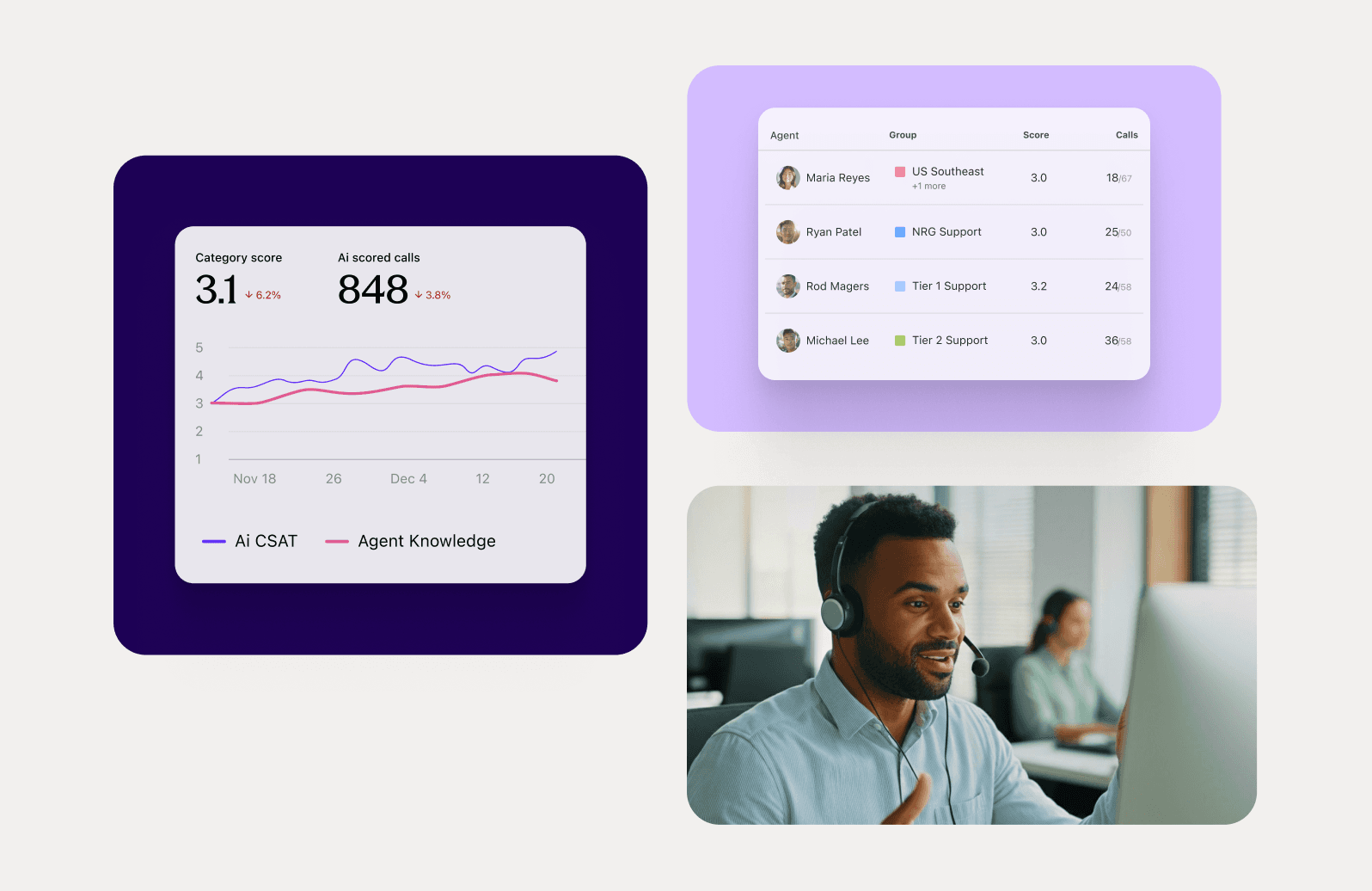

Finally, the model averages those seven scores into a composite CSAT score. That means that for almost every customer call on Dialpad (the rare exceptions being extremely short calls, hang-ups, and the like), we can generate not just a CSAT score but a detailed explanation of how we arrived at it, plus actionable insights that help agents and supervisors drive CSAT even higher.
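The averaging step is simple arithmetic; the sketch below shows it in Python. It assumes an unweighted arithmetic mean with no rounding, since the post doesn’t say whether categories are weighted or whether the result is rounded to a whole 1-to-5 score. The function name `composite_csat` and the example scores are hypothetical.

```python
def composite_csat(category_scores: dict[str, int]) -> float:
    """Unweighted mean of the seven per-category scores (an assumption)."""
    if len(category_scores) != 7:
        raise ValueError(f"expected 7 category scores, got {len(category_scores)}")
    if any(not 1 <= score <= 5 for score in category_scores.values()):
        raise ValueError("every category score must be between 1 and 5")
    # Plain arithmetic mean; no weighting or rounding is applied here.
    return sum(category_scores.values()) / len(category_scores)


scores = {
    "Completeness of resolution": 2,
    "Agent knowledge": 4,
    "Agent empathy": 4,
    "Agent communication": 3,
    "Customer sentiment": 2,
    "Agent speed and efficiency": 3,
    "Operational friction": 5,
}
print(round(composite_csat(scores), 2))  # 3.29
```

Here the seven scores sum to 23, so the composite is 23 / 7, or about 3.29.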

From scores to strategy

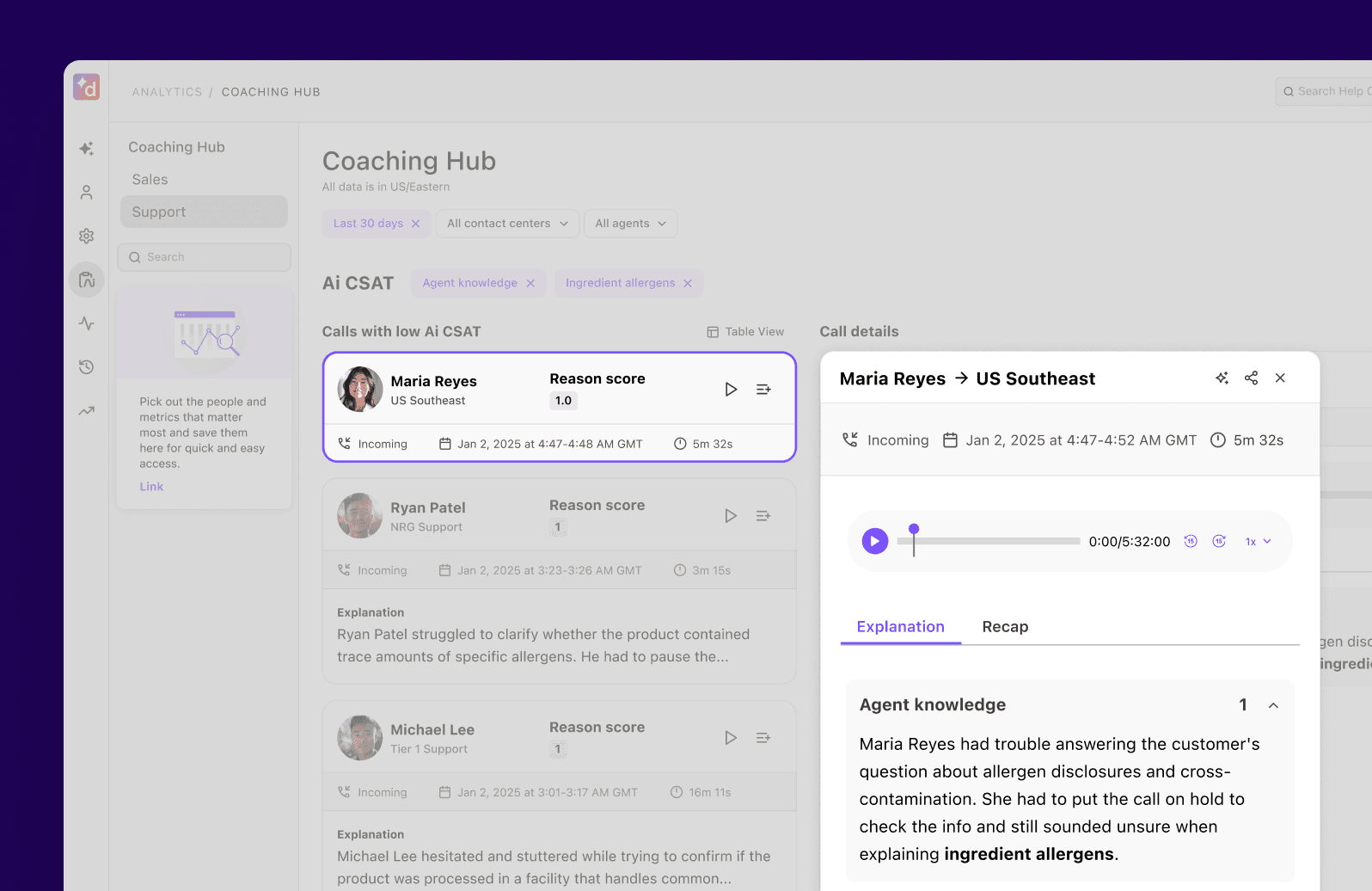

The real power of Ai CSAT Explanations isn’t just better scoring; it’s how the explanations behind the scores surface patterns across thousands of conversations. The Ai CSAT Explanations Coaching Hub shows which categories pulled down your call center’s overall CSAT score, how many calls each problem affected, how much each problem lowered your score, and more.

For instance, suppose Ai CSAT Explanations tags a group of calls from a certain call center as [SMS registration] issues and gives them generally low Ai CSAT and resolution scores. Working with Ai CSAT Explanations, supervisors studying that call center’s performance can track not only how often the [SMS registration] issue occurs, but how much it hurts the team’s overall CSAT, and thus how much opportunity there is for improvement.
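Tracking how often a sub-category occurs, and how its calls score, is a group-by over tagged calls. The Python sketch below is a minimal illustration, assuming each call arrives as a `(sub-category tag, composite CSAT)` pair; the function name `summarize_by_subcategory` and the sample data are hypothetical.

```python
from collections.abc import Iterable


def summarize_by_subcategory(
    calls: Iterable[tuple[str, float]],
) -> dict[str, tuple[int, float]]:
    """Map each sub-category tag to (number of calls, average CSAT on those calls)."""
    counts: dict[str, int] = {}
    score_sums: dict[str, float] = {}
    for sub_category, csat in calls:
        counts[sub_category] = counts.get(sub_category, 0) + 1
        score_sums[sub_category] = score_sums.get(sub_category, 0.0) + csat
    return {tag: (counts[tag], score_sums[tag] / counts[tag]) for tag in counts}


# Made-up example data: (sub-category tag, composite CSAT) per call.
calls = [
    ("SMS registration", 1.5),
    ("SMS registration", 2.5),
    ("billing issue", 4.0),
]
summary = summarize_by_subcategory(calls)
# summary == {"SMS registration": (2, 2.0), "billing issue": (1, 4.0)}
```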

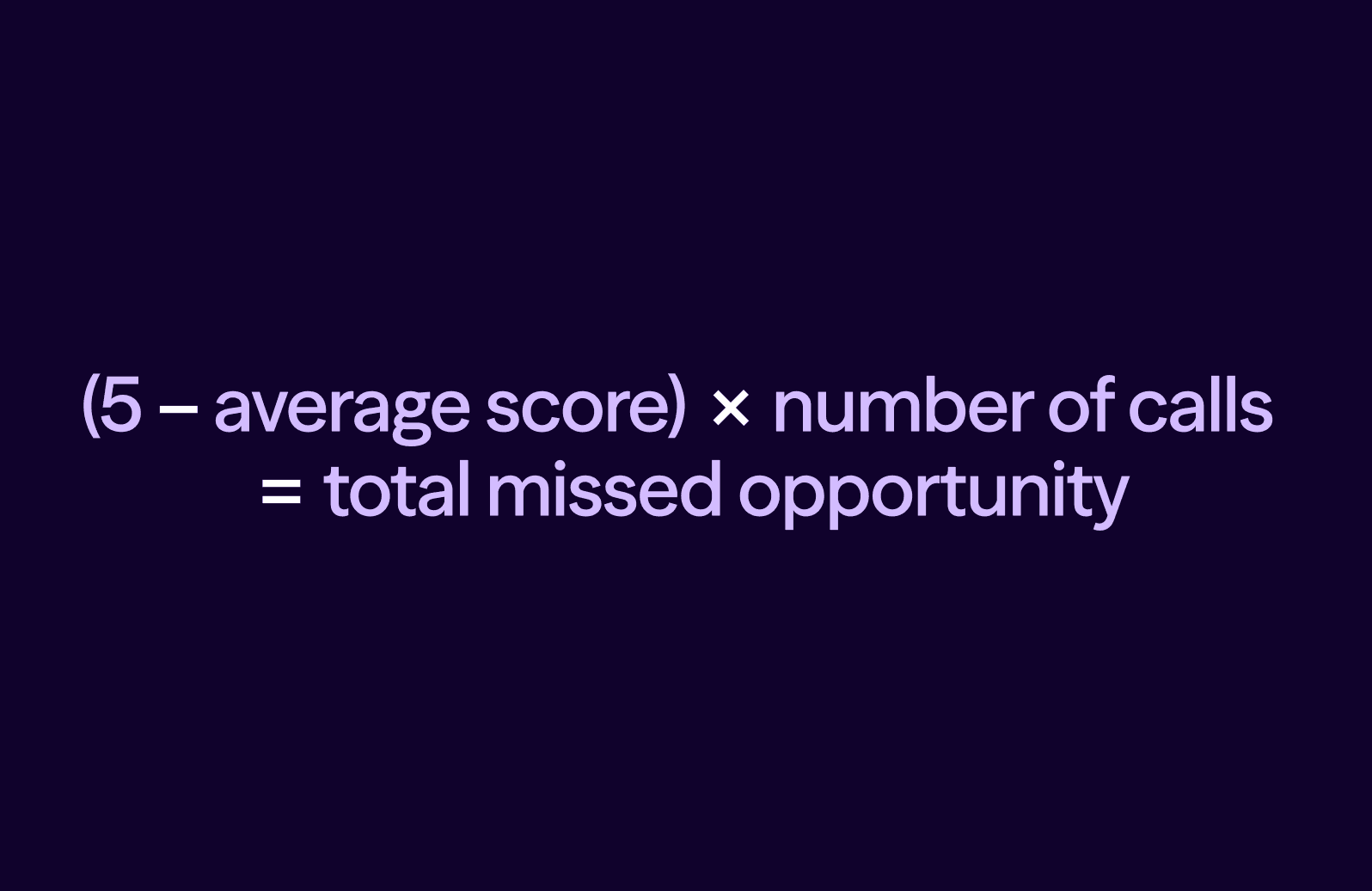

We call this metric “total missed opportunity.” Here’s how we calculate it:

Rank those missed opportunity totals by sub-category and you’ve got a clear guide to where you can focus your coaching and process improvement to make agents as effective as possible, and customers as happy as possible.
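The ranking step can be sketched in Python as well. The exact missed-opportunity formula isn’t reproduced in this text, so this sketch assumes each call already carries a missed-opportunity value and shows only the summing-and-ranking step. The function name `rank_missed_opportunity` and the sample values are hypothetical.

```python
from collections.abc import Iterable


def rank_missed_opportunity(
    per_call: Iterable[tuple[str, float]],
) -> list[tuple[str, float]]:
    """Sum per-call missed-opportunity values by sub-category, largest total first.

    The per-call values are taken as given; the formula that produces them
    is not reproduced here.
    """
    totals: dict[str, float] = {}
    for sub_category, missed in per_call:
        totals[sub_category] = totals.get(sub_category, 0.0) + missed
    # Largest total first; ties broken alphabetically so the order is stable.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


ranking = rank_missed_opportunity([
    ("SMS registration", 1.5),
    ("billing issue", 0.5),
    ("SMS registration", 2.0),
    ("product confusion", 3.0),
])
# ranking == [("SMS registration", 3.5), ("product confusion", 3.0), ("billing issue", 0.5)]
```

The top of the resulting list is where coaching or process fixes would, under these assumptions, recover the most CSAT.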

Customers can also filter results by any of the seven categories, so insights line up with their own priorities. For example, one business might care only about customer sentiment, while another is interested primarily in how quickly issues get resolved. A third might want to focus on empathy or resolution rates. Each can select the categories most relevant to them, then drill down to understand what’s driving those metrics up and what’s holding them back.
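Filtering by category amounts to looking only at the scores a business selects. This minimal Python sketch assumes each call is a dict of category name to 1-to-5 score; the function name `average_selected_categories` and the sample calls are hypothetical, and the real product’s filtering may work differently.

```python
from collections.abc import Iterable


def average_selected_categories(
    calls: list[dict[str, int]],
    selected: Iterable[str],
) -> dict[str, float]:
    """Average each selected category's score across calls; other categories are ignored."""
    averages: dict[str, float] = {}
    for category in selected:
        scores = [call[category] for call in calls if category in call]
        if scores:  # skip categories with no scored calls
            averages[category] = sum(scores) / len(scores)
    return averages


calls = [
    {"Customer sentiment": 2, "Agent empathy": 4, "Agent speed and efficiency": 3},
    {"Customer sentiment": 4, "Agent empathy": 5, "Agent speed and efficiency": 1},
]
focus = average_selected_categories(calls, ["Customer sentiment", "Agent speed and efficiency"])
# focus == {"Customer sentiment": 3.0, "Agent speed and efficiency": 2.0}
```

A business focused on sentiment and speed sees only those two averages; the empathy scores are left out entirely.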

Human-Ai teamwork, by design

Going forward, we plan to make Ai CSAT Explanations even more flexible, with dashboards, alerts, and coaching tools that put these insights directly into the hands of agents, supervisors, and CX leaders. We’re also exploring more categories, smarter aggregations, and customizable reports that give teams full control over how they use their customer data. Imagine one customer wants their final CSAT score to reflect only customer sentiment, while another needs it to include sentiment, agent knowledge, and resolution speed. Both should be able to tailor Ai CSAT Explanations precisely to their needs.

After all, perhaps the most important thing we learned during this CSAT journey is that no matter how good Ai gets at analyzing transcripts, it won’t always be able to replace the human factor in customer care. Empathy, adaptability, and trust-building are inherently human strengths that Ai CSAT Explanations is designed to support, not replace.

Because your customers don’t just want answers; they want to be truly heard. Now, finally, Ai can help you do both.