“GenAI for the People: A Framework for AI Transformation”

Head of AI Transformation


ChatGPT burst onto the scene in late 2022, and AI has held the spotlight ever since. Sure, we’re seeing “AI fatigue” in some corners. But serious curiosity and heavy investment from entrepreneurs, managers, and specialists are stronger than ever.

My inbox is living proof. Every week I field a steady stream of questions about how individuals, teams, and companies can make the leap to an “AI-first” mindset. It’s a big question. In this post I’ll offer an answer: a framework you can apply to your organization’s AI transition, whether you’re a startup founder, a 10-person crew, a multinational enterprise, or anyone else. AI is now omnipresent and essential, our era’s Swiss Army Knife. We all must become capable of using this new tool, which includes imagining new ways to use it. Generative AI truly is—and must be—for the people.

Here’s the AI transformation outline for making this grand aspiration real:

I. Governance squad

II. Use case pilots

III. Upskilled workforce

IV. Measure, scale, promote

Part I: Governance squad

Appoint an organization-wide AI governance squad

“AI for the people” starts with assembling a governance squad comprising experts from throughout the organization (IT, legal, HR, product, sales, marketing, etc.) with authority to draft policies, approve budget and use cases, and monitor risk.

Define your big-picture AI goals and strategy

The governance squad’s first task is to write an AI mandate that’s clearly aligned with your existing business strategy and KPIs. What are your organization’s goals for AI? Increasing efficiency and productivity? Upgrading existing products? Creating new ones? Make the vision clear in writing.

Allocate cross-functional budget from Day One

Make sure the governance squad and all related teams have sufficient approved budget to select and run a series of 4- to 6-week pilot programs for potential AI projects.

Write AI principles mapped to existing and emerging regulations

Governance principles (a concise set of guidelines that establish your organization’s position on AI transparency, fairness, privacy, safety, and human oversight) shouldn’t be the last boxes on your AI checklist. They’re the ideas you start with, consult throughout the process, and keep revisiting in the future.

Your AI principles will address your organization’s privacy, security, and legal risks; impact your customer experience and product development; and help you navigate financial and reputational stumbling blocks. Think these principles through deeply and make sure they’re mapped to existing and emerging AI regulations.

Part II: Use case pilots

In 2022 the primary goal of AI adoption was to identify use cases. Today it’s about choosing a small number from thousands of possibilities and executing them well.

Your AI team should begin this process by compiling lists of possible use cases of two basic types: value drivers and enabling programs.

Identify existing tasks and processes that value drivers can address

Value drivers boost productivity (top-line growth) or savings (bottom-line improvement). These are projects that earn short-term kudos: you run a pilot, show ROI impact, and wind up with a great story to tell.

Ex: A model that writes product copy, blog posts, meeting recaps, etc.

Ex: A customer-facing chatbot to handle first-level support questions.

Choose the enabling programs that are best aligned with existing business goals

Enablers drive innovation by empowering anyone to explore new ideas.

Ex: A program teaching basic prompt writing, the AI tool landscape, etc.

Ex: A peer-to-peer community for sharing AI ideas and best practices.

Compile a list of potential enabling programs that might best serve your company’s long-term vision and goals.

Advance use cases which pass the 4-question litmus test

With your list of potential value drivers and enabling programs in hand, ask these four questions about each potential project:

Is the task repetitive?

Do you find yourself doing this task frequently, even routinely?

Is the output text-heavy?

LLMs are trained on text, so text-oriented use cases yield the best results. Models for images and video still require advanced prompting skills.

Do you have good data?

Good AI results require good data. The more data your instructions include, the better your results will be—as long as that data is high-quality.

Is the project low risk?

Avoid confidential data, and require human oversight of the AI process.

Cases that earn a ‘yes’ for all four questions reach the next stage of the contest.
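If you track candidates in a spreadsheet or a script, the litmus test boils down to one all-or-nothing check. Here’s a minimal Python sketch; the `UseCase` fields and the example entries are hypothetical, and the actual yes/no answers have to come from the teams who know the work.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """A candidate pilot with yes/no answers to the four litmus-test questions."""
    name: str
    repetitive: bool   # Is the task repetitive?
    text_heavy: bool   # Is the output text-heavy?
    good_data: bool    # Do you have good data?
    low_risk: bool     # Is the project low risk?


def passes_litmus_test(case: UseCase) -> bool:
    """A candidate advances only with a 'yes' on all four questions."""
    return all((case.repetitive, case.text_heavy, case.good_data, case.low_risk))


def shortlist(candidates: list[UseCase]) -> list[UseCase]:
    """Keep the candidates that reach the next stage."""
    return [case for case in candidates if passes_litmus_test(case)]


if __name__ == "__main__":
    # Hypothetical candidates and answers.
    candidates = [
        UseCase("Product copy drafts", True, True, True, True),
        UseCase("Promo video generation", True, False, True, True),
        UseCase("Summaries of confidential deal memos", True, True, True, False),
    ]
    for case in shortlist(candidates):
        print(case.name)  # prints "Product copy drafts"
```

A single "no" knocks a candidate out, which mirrors the rule above: only four yeses move a project forward.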

Assign resources to the highest-value, lowest-effort pilots

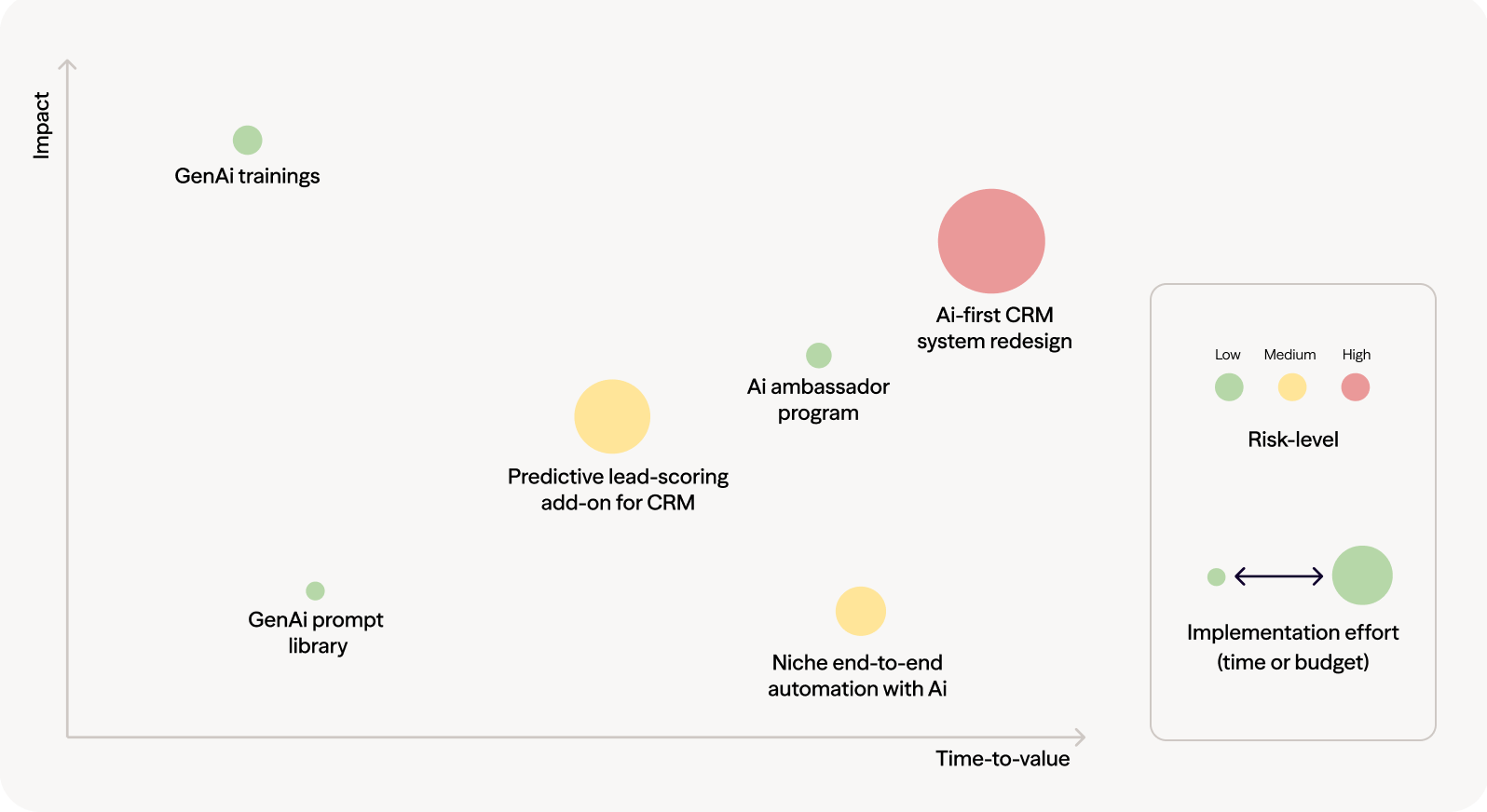

Now plot your remaining candidates in a matrix that maps the value you could derive from each project against the effort required to test-drive it:
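One way to turn the matrix into a ranked pilot list, sketched in Python under a few assumptions: the governance squad scores value and effort from 1 to 5, scores at the midpoint count as high value and low effort, and the quadrant names ("quick win", "big bet", and so on) are illustrative labels rather than part of the framework.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    value: int   # estimated business value, 1 (low) to 5 (high)
    effort: int  # estimated effort to pilot, 1 (low) to 5 (high)


def quadrant(c: Candidate, midpoint: int = 3) -> str:
    """Place a candidate in the value/effort matrix.

    Scores equal to the midpoint count as high value and as low effort.
    """
    high_value = c.value >= midpoint
    low_effort = c.effort <= midpoint
    if high_value and low_effort:
        return "quick win"
    if high_value:
        return "big bet"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def pilot_order(candidates: list[Candidate]) -> list[Candidate]:
    """Quick wins first; then higher value first, then lower effort first."""
    return sorted(candidates, key=lambda c: (quadrant(c) != "quick win", -c.value, c.effort))


if __name__ == "__main__":
    # Hypothetical scores from a governance-squad workshop.
    ideas = [
        Candidate("Custom sales-forecast model", value=5, effort=5),
        Candidate("Meeting recaps", value=4, effort=1),
        Candidate("Slide-deck formatting", value=2, effort=2),
    ]
    for idea in pilot_order(ideas):
        print(f"{idea.name}: {quadrant(idea)}")
```

Sorting on a tuple keeps the logic transparent: anyone on the squad can see exactly why one pilot outranks another.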

Hold discovery sessions to choose use-case tools

What tools should you use to launch your chosen use-case pilots? Hold discovery sessions that give department experts a chance to figure this out. Absent those experts, ask Gemini or ChatGPT to research the problem. Using out-of-the-box AI tools to build your bespoke AI tools is very 2025. 🚀

Define success metrics, embed human-in-the-loop review

Set KPIs in advance that define each pilot’s success or failure. Then embed human-in-the-loop review, so a person checks AI outputs before they’re used, backed by disciplined oversight of processes and measurement.
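A minimal sketch of a human-in-the-loop gate, assuming a simple in-memory model: AI drafts can’t be released until a named reviewer approves them, and the share of approved drafts becomes one measurable signal for the pilot. The `Draft`, `review`, `publish`, and `approval_rate` names are hypothetical; a real pilot would hook this logic into whatever workflow tool the team already uses.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    """An AI-generated output that waits for human review."""
    content: str
    status: Status = Status.PENDING
    reviewer: Optional[str] = None
    notes: str = ""


def review(draft: Draft, reviewer: str, approve: bool, notes: str = "") -> Draft:
    """Record a named person's decision on a draft."""
    draft.status = Status.APPROVED if approve else Status.REJECTED
    draft.reviewer = reviewer
    draft.notes = notes
    return draft


def publish(draft: Draft) -> str:
    """Release content only after a human has approved it."""
    if draft.status is not Status.APPROVED or draft.reviewer is None:
        raise PermissionError("AI output needs human approval before release")
    return draft.content


def approval_rate(drafts: list[Draft]) -> float:
    """Share of reviewed drafts that were approved (pending drafts are ignored)."""
    reviewed = [d for d in drafts if d.status is not Status.PENDING]
    if not reviewed:
        return 0.0
    return sum(d.status is Status.APPROVED for d in reviewed) / len(reviewed)
```

A falling approval rate is an early warning that a pilot’s prompts or data need work, well before the final KPI review.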

Part III: Upskilled workforce

At a recent Dialpad training I posed a simple question: ‘How confident are you about using GenAI at work?’ Here’s the chart we came up with.

Notice that even at an AI-forward company like Dialpad, more of us sit on the left (not very confident) than on the right (confident). This is a typical distribution: getting started is where most people stall.

How do you move your organization’s AI confidence curve from left to right? It’s fine to start with the why of AI, but the key is having AI pioneers show everyone else, in careful detail, what they can do with AI and how to do it.

Launch programs that protect data and promote innovation

GenAI 101 and hands-on labs

People who want to try AI are going to do so, on work machines or, if need be, personal devices. Encourage their curiosity with an enterprise-licensed, SSO-protected AI assistant (or an internal RAG sandbox) before you consider blocking consumer apps. If you’re on Microsoft, use Copilot. For Google Workspace, go with Gemini. If you use neither, ask Anthropic or OpenAI for help with data protection and privacy questions.

Layered risk controls

| Stage | Control | Tooling ideas |
|---|---|---|
| Pre-deploy | Data protection impact assessment (DPIA), red-team prompts, policy-as-code gate | DPIA template, OPA/Conftest |
| Runtime | Inference firewall (toxicity, PII, jailbreak) | LlamaGuard, Prompt Armor |
| Post-deploy | Drift & misuse monitors; bias/equity audits | WhyLabs, Arize, custom SQL alerts |
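OPA and Conftest express policy-as-code gates in Rego. As a language-neutral illustration of the same idea, here’s a plain-Python sketch of a pre-deploy gate that refuses to ship until every layer in the table is covered. The `DeploymentRequest` fields are hypothetical stand-ins for whatever your DPIA template and release checklist actually record; this is not the OPA or Conftest API.

```python
from dataclasses import dataclass


@dataclass
class DeploymentRequest:
    """Hypothetical release record for an AI feature."""
    name: str
    dpia_completed: bool          # pre-deploy: data protection impact assessment done
    red_team_passed: bool         # pre-deploy: red-team prompts run, issues resolved
    runtime_filter_enabled: bool  # runtime: inference firewall in front of the model
    monitoring_owner: str         # post-deploy: who watches drift, misuse, and bias audits


def policy_violations(req: DeploymentRequest) -> list[str]:
    """Return every unmet control; an empty list means the gate is open."""
    violations = []
    if not req.dpia_completed:
        violations.append("pre-deploy: DPIA not completed")
    if not req.red_team_passed:
        violations.append("pre-deploy: red-team prompts not passed")
    if not req.runtime_filter_enabled:
        violations.append("runtime: inference firewall not enabled")
    if not req.monitoring_owner.strip():
        violations.append("post-deploy: no owner for drift and misuse monitoring")
    return violations


def gate(req: DeploymentRequest) -> bool:
    """Allow deployment only when no controls are missing."""
    return not policy_violations(req)


if __name__ == "__main__":
    request = DeploymentRequest("support-chatbot", True, False, True, "")
    for problem in policy_violations(request):
        print(problem)
```

Returning the full list of violations, rather than failing on the first one, tells a team everything it needs to fix in a single pass.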

Provide sandbox tools and prompt libraries

Here are some ways to get AI to the people while protecting the company.

Prompt library and cheat sheets

Team-curated examples with safe variables already tokenized.

Hands-on clinics

Monthly drop-in sessions to debug prompts and discuss new tools.

Self-serve sandbox

Playground that logs everything but never writes to prod data.

“Safe-share” pattern

Built-in redaction button for screenshots and text fed into public models.

Rapid approval lane

48-hour review for new SaaS tools so innovators aren’t stuck in limbo.
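The prompt-library idea can be sketched with Python’s standard `string.Template`. Each entry declares its placeholder variables, and rendering fails if a variable is missing or unexpected, so people fill in only the slots the team has vetted. The library structure and the `meeting_recap` entry are hypothetical examples.

```python
from string import Template

# Hypothetical library: each entry declares the only placeholders it accepts.
PROMPT_LIBRARY = {
    "meeting_recap": {
        "template": Template(
            "Summarize the meeting notes below in five bullet points for $audience. "
            "List action items with owners.\n\nNotes:\n$notes"
        ),
        "variables": {"audience", "notes"},
    },
}


def render_prompt(name: str, **values: str) -> str:
    """Fill a library prompt, rejecting missing or unexpected variables."""
    entry = PROMPT_LIBRARY[name]
    allowed = entry["variables"]
    unexpected = set(values) - allowed
    missing = allowed - set(values)
    if unexpected or missing:
        raise ValueError(f"unexpected: {sorted(unexpected)}, missing: {sorted(missing)}")
    return entry["template"].substitute(values)


if __name__ == "__main__":
    print(render_prompt("meeting_recap", audience="the sales team", notes="Ship v2 on Friday."))
```

Keeping the wording fixed and exposing only named slots makes library prompts easier to review once and reuse safely.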
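Behind a “safe-share” redaction button, the core step can be as simple as pattern-based substitution before text leaves the company. This Python sketch assumes regex detection of email addresses and phone-like numbers only. It’s deliberately crude (the phone pattern may also catch some dates and IDs), it doesn’t handle screenshots, and a production tool would rely on a vetted PII detector instead.

```python
import re

# Deliberately simple, hypothetical patterns; not a complete PII detector.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each match with a labeled placeholder before text is shared."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(redact("Email jane.doe@example.com or call +1 (555) 123-4567."))
    # prints: Email [EMAIL] or call [PHONE].
```

Labeled placeholders, rather than blank deletions, keep the shared text readable, so the public model still understands that a contact detail was there.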

Set clear expectations for AI capabilities

Your introductory training needs to be candid about AI’s limitations. When newcomers expect a one-line ChatGPT prompt to return a perfect blog post, their first mediocre response disappoints them, and adoption plummets. Set realistic expectations from the outset about what AI can and cannot do.

Part IV: Measure, scale, promote

Run sprints for chosen use-case pilots

You’ve prioritized use cases and set clear metrics that each one will be expected to meet. Now the fun begins, as teams run each project through a 4- to 6-week sprint.

Integrate successful pilots; retire failed ones

Check the numbers. Did each project’s KPIs validate its business case? If so, great! Now swiftly integrate this scrappy solution into core workflows, so it gets the traction it needs to achieve lasting impact.

And if the project missed its metrics, no problem—the experiment delivered a valuable result. Learn from the data and start over with another use case.
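Checking the numbers can be as mechanical as comparing each pre-agreed KPI with its target. A minimal Python sketch, assuming every KPI must be met for a pilot to be integrated; the metric names and figures in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class KPI:
    """A success metric agreed before the pilot started."""
    name: str
    target: float
    actual: float
    higher_is_better: bool = True

    def met(self) -> bool:
        if self.higher_is_better:
            return self.actual >= self.target
        return self.actual <= self.target


def pilot_decision(kpis: list[KPI]) -> str:
    """Integrate only if every KPI hit its target; otherwise retire and keep the lessons."""
    if kpis and all(k.met() for k in kpis):
        return "integrate"
    return "retire"


def missed_kpis(kpis: list[KPI]) -> list[str]:
    """Names of the KPIs that fell short, for the lessons-learned write-up."""
    return [k.name for k in kpis if not k.met()]


if __name__ == "__main__":
    # Hypothetical results from a support-chatbot pilot.
    results = [
        KPI("deflection_rate", target=0.30, actual=0.34),
        KPI("avg_handle_minutes", target=6.0, actual=5.5, higher_is_better=False),
        KPI("csat", target=4.2, actual=4.0),
    ]
    print(pilot_decision(results), missed_kpis(results))  # retire ['csat']
```

Listing the missed KPIs alongside the decision turns a retired pilot into the “valuable result” described above, not just a failure.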

Share success stories and lessons learned

Promote your winners! Sharing AI success stories keeps adoption rates steady or growing.

Revisit principles and roadmap as AI landscape evolves

AI transformation isn’t opt-in; it’s auto-renew. Your last step is to revisit the earlier steps. Business needs shift. Principles need updates. Colleagues will join the AI team; others will leave. New use cases need assessment, and old ones need retiring. Your roadmap and its KPIs need constant adjusting.

Commit to the cycle, and keep your organization pursuing the amazing.

AI transformation checklist

I. Governance squad

Appoint an organization-wide AI governance squad

Define your big-picture goals and strategy

Allocate cross-functional budget from Day One

Write AI principles mapped to existing & emerging regulations

II. Use case pilots

Identify tasks & processes that value drivers can address

Choose the enabling programs that are best aligned with existing business goals

List use-case candidates that align with business goals

Advance use cases for which you answered “yes” to all 4 questions

Assign pilot resources to highest-value, lowest-effort candidates

Hold discovery sessions for experts to choose use-case tools

Define success metrics, embed human-in-the-loop review

III. Upskilled workforce

Launch programs that protect data & promote innovation

Provide sandbox tools & prompt libraries

Set clear expectations for AI capabilities

IV. Measure, scale, promote

Run sprints for chosen use-case pilots

Integrate successful pilots; retire failed ones

Share success stories & lessons learned

Revisit principles & roadmap as AI landscape evolves